Moonshot AI, a Chinese startup founded in 2023, has released Kimi K2 Thinking. It's free and open source, and it performs as well as OpenAI's flagship GPT-5 model on several key benchmarks.

This matters because GPT-5 is a paid, proprietary system that costs serious money to access. K2 Thinking is available to anyone, for free, with full code and model weights published on Hugging Face.

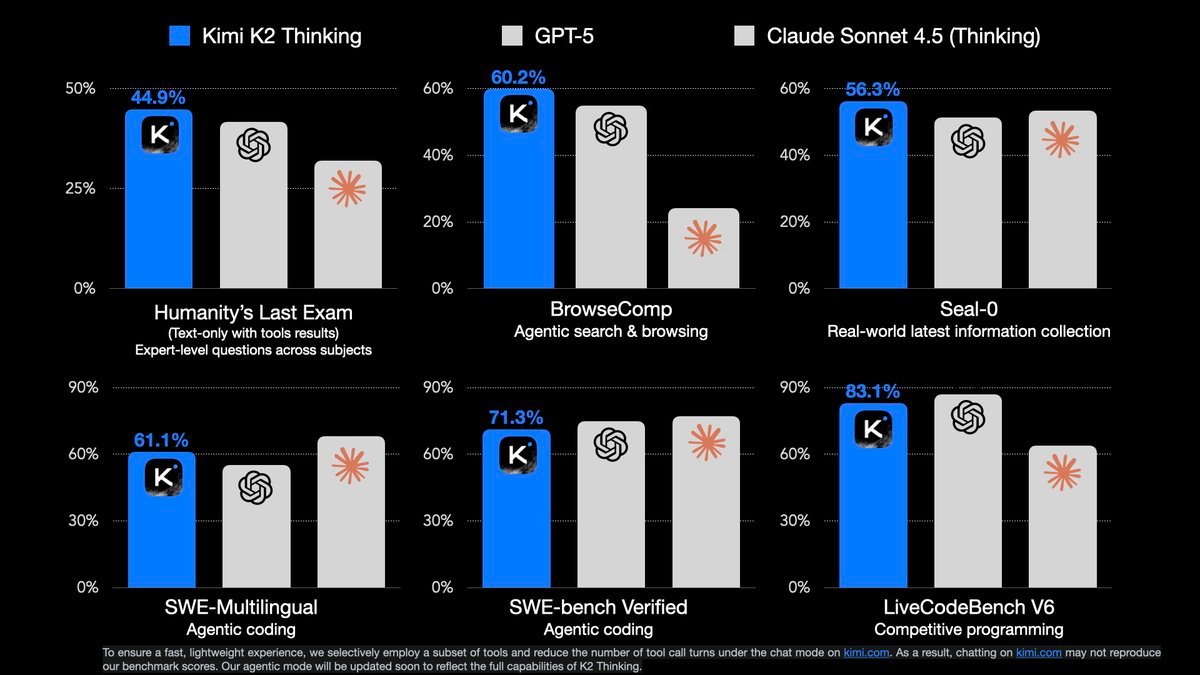

The performance numbers are striking

K2 Thinking achieved 44.9% on Humanity's Last Exam, 60.2% on BrowseComp (an agentic web search and reasoning test), 71.3% on SWE-Bench Verified, and 83.1% on LiveCodeBench v6. The last two are coding evaluations, where performance translates most directly into real-world utility. All figures are as published by Moonshot.

On BrowseComp specifically, K2 Thinking's 60.2% beats GPT-5's 54.9% by more than five points and is about two and a half times Claude Sonnet 4.5's 24.1%. It also edges GPT-5 on GPQA Diamond (85.7% versus 84.5%) and matches it on mathematical reasoning tasks like AIME 2025 and HMMT 2025.

Only in certain heavy mode configurations, where GPT-5 aggregates multiple trajectories, does the proprietary model regain parity.

Beating the previous champion

Just two and a half weeks ago, another Chinese model called MiniMax M2 was being called the "new king of open source LLMs." It achieved impressive scores that placed it near GPT-5 level capability.

K2 Thinking just blew past it. Its BrowseComp result of 60.2% exceeds M2's 44.0%, and its SWE-Bench Verified score of 71.3% edges out M2's 69.4%.

The speed of advancement is remarkable. Two Chinese open source models in quick succession, each surpassing the last, now matching or exceeding what OpenAI charges significant money to access.

How it works

K2 Thinking is a Mixture of Experts model with one trillion total parameters, of which 32 billion are active for each token. It combines long horizon reasoning with structured tool use, executing 200 to 300 sequential tool calls without human intervention.

The model outputs an auxiliary field called reasoning_content, revealing intermediate logic before each final response. This transparency preserves coherence across long multi turn tasks and multi step tool calls.
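As a rough illustration of how a client might separate the two fields, here is a minimal Python sketch. Only the field name reasoning_content comes from the description above. The surrounding payload shape (choices, message, content) is an assumption modelled on common chat-completion APIs, and the helper name split_reasoning is hypothetical.

```python
import json

# Hypothetical response payload. The choices -> message layout is an
# assumption; only the reasoning_content field name is from the article.
raw = """
{
  "choices": [
    {
      "message": {
        "role": "assistant",
        "reasoning_content": "The user wants a sum. 2 + 2 = 4.",
        "content": "The answer is 4."
      }
    }
  ]
}
"""


def split_reasoning(payload: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a chat-style JSON payload."""
    message = json.loads(payload)["choices"][0]["message"]
    # Use .get so payloads without the auxiliary field still parse.
    reasoning = message.get("reasoning_content") or ""
    answer = message.get("content") or ""
    return reasoning, answer


reasoning, answer = split_reasoning(raw)
print("reasoning:", reasoning)
print("answer:", answer)
```

Keeping the reasoning separate from the answer lets an application log or display the intermediate logic without mixing it into the user-facing reply.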

A reference implementation shows the model autonomously conducting a "daily news report" workflow, invoking date and web search tools, analyzing retrieved content, and composing structured output while maintaining internal reasoning state.
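The general shape of such a workflow can be sketched as a loop that alternates model steps with tool calls until the model produces a final answer. The Python below is not Moonshot's reference implementation. The model is replaced by a scripted stand-in (fake_model), the tools are offline stubs, and every name in it is hypothetical. The default cap of 300 calls simply echoes the figure quoted earlier.

```python
from datetime import date
from typing import Callable


# Stub tools. Real tools would read a clock and call a search API; these
# return fixed values so the sketch runs offline.
def get_date(_: str) -> str:
    return date(2025, 1, 1).isoformat()


def web_search(query: str) -> str:
    return f"(stub results for '{query}')"


TOOLS: dict[str, Callable[[str], str]] = {"date": get_date, "web_search": web_search}


def fake_model(history: list[dict]) -> dict:
    """Scripted stand-in for the model (an assumption, not K2 Thinking)."""
    tool_turns = [m for m in history if m["role"] == "tool"]
    if len(tool_turns) == 0:
        return {"reasoning_content": "Need today's date first.",
                "tool": "date", "argument": ""}
    if len(tool_turns) == 1:
        today = tool_turns[0]["content"]
        return {"reasoning_content": "Now search for news on that date.",
                "tool": "web_search", "argument": f"news {today}"}
    return {"reasoning_content": "Enough material; write the report.",
            "content": "Daily report based on: " + tool_turns[-1]["content"]}


def run_agent(task: str, max_tool_calls: int = 300) -> tuple[str, list[dict]]:
    """Alternate model steps and tool calls until a final answer appears."""
    history: list[dict] = [{"role": "user", "content": task}]
    calls = 0
    while True:
        step = fake_model(history)
        # Keep the reasoning in the history so later steps can build on it.
        history.append({"role": "assistant", **step})
        if "tool" not in step:
            return step["content"], history
        if calls >= max_tool_calls:
            raise RuntimeError("tool-call budget exhausted")
        result = TOOLS[step["tool"]](step["argument"])
        history.append({"role": "tool", "content": result})
        calls += 1


report, history = run_agent("Write a daily news report.")
print(report)
```

The explicit budget matters in a loop like this: without a cap, a model that never stops requesting tools would run indefinitely.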

Surprisingly affordable

Despite its trillion parameter scale, K2 Thinking's runtime cost remains modest. Moonshot lists usage at $0.15 per 1 million input tokens on a cache hit, $0.60 per 1 million input tokens on a cache miss, and $2.50 per 1 million output tokens.

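Compare that to GPT-5 at $1.25 per 1 million input tokens and $10 per 1 million output tokens. At those list prices, K2 Thinking costs roughly half as much for uncached input and a quarter as much for output, while matching or exceeding GPT-5 on the benchmarks above.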
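To put the price gap in concrete terms, the sketch below applies both price lists to a made-up workload. The prices are the ones quoted above, read as USD per 1 million tokens. The workload size, and the choice of the cache-miss rate for K2 Thinking's input, are assumptions for illustration.

```python
# Prices in USD per 1 million tokens, as quoted in this article.
K2 = {"input": 0.60, "output": 2.50}    # cache-miss input price
GPT5 = {"input": 1.25, "output": 10.00}


def cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Total cost in USD for a given number of input and output tokens."""
    total = prices["input"] * input_tokens + prices["output"] * output_tokens
    return total / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
k2_cost = cost(K2, 10_000_000, 2_000_000)
gpt5_cost = cost(GPT5, 10_000_000, 2_000_000)

print(f"K2 Thinking: ${k2_cost:.2f}")
print(f"GPT-5:       ${gpt5_cost:.2f}")
print(f"input price ratio:  {GPT5['input'] / K2['input']:.2f}x")
print(f"output price ratio: {GPT5['output'] / K2['output']:.2f}x")
print(f"workload ratio:     {gpt5_cost / k2_cost:.2f}x")
```

The overall ratio depends on the mix of input and output tokens, and on how often K2 Thinking's cheaper cache-hit rate applies.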

The licensing catch

Moonshot released K2 Thinking under a Modified MIT License. It grants full commercial and derivative rights, meaning researchers and developers can use it freely in commercial applications.

There's one restriction. If your software or derivative product serves over 100 million monthly active users or generates over $20 million per month in revenue, you must prominently display "Kimi K2" on the product's user interface.

For most research and enterprise applications, this functions as a light touch attribution requirement while preserving standard MIT licensing freedoms.
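The attribution trigger, as paraphrased here, reduces to a simple either-or check. The Python sketch below encodes it that way. The function and constant names are hypothetical, and the license text itself remains the authority on how users and revenue are counted.

```python
# Thresholds as stated in this article's summary of the license.
MAU_THRESHOLD = 100_000_000          # monthly active users
REVENUE_THRESHOLD_USD = 20_000_000   # revenue per month, in USD


def must_display_kimi_k2(monthly_active_users: int, monthly_revenue_usd: float) -> bool:
    """True if either threshold is exceeded, per the article's paraphrase."""
    return (monthly_active_users > MAU_THRESHOLD
            or monthly_revenue_usd > REVENUE_THRESHOLD_USD)


# A product with 2M users and $500k monthly revenue is below both thresholds.
print(must_display_kimi_k2(2_000_000, 500_000))
# A product with 150M users exceeds the user threshold regardless of revenue.
print(must_display_kimi_k2(150_000_000, 0))
```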

What this means

The timing is awkward for OpenAI. Just a week ago, OpenAI CFO Sarah Friar sparked controversy by suggesting at a WSJ Tech Live event that the US government might eventually need to provide a "backstop" for the company's more than $1.4 trillion in compute and data centre commitments.

Although she later clarified OpenAI wasn't seeking direct federal support, the episode reignited debate about the scale and concentration of AI capital spending.

Now a Chinese startup with a fraction of OpenAI's resources has released a free model matching GPT-5's performance. If enterprises can get comparable or better performance from a free, open source Chinese model, why would they continue paying for proprietary alternatives?

Airbnb has already raised eyebrows for admitting to heavily using Alibaba's Qwen over OpenAI's offerings. K2 Thinking will likely accelerate that trend.

The gap between closed frontier systems and publicly available models has effectively collapsed for high end reasoning and coding. That's not just a technical milestone. It's a strategic one.