Over the past decade, artificial intelligence has evolved from an academic curiosity into the engine of modern digital transformation. At the heart of this evolution lies one largely invisible metric: training computation. Measured in total floating-point operations (FLOPs), it quantifies the number of arithmetic operations used to train an AI model. According to Epoch AI (2025) and Our World in Data, the compute required to train leading AI systems has grown exponentially, increasing more than 300-million-fold between 2012 and 2025.

This report examines the forces behind this growth — architectural, algorithmic, economic, and geopolitical — and reflects on the consequences for efficiency, sustainability, and governance. The findings reveal that the exponential climb in compute reflects both human ambition and systemic inequality: while a few frontier labs dominate the landscape through massive resource investments, others pivot toward efficiency and innovation at the edge.

Introduction: Computation as the Currency of Intelligence

Artificial intelligence’s success has been driven less by conceptual breakthroughs than by relentless improvements in computational scale. Every generative model, image recogniser, or decision engine is the product of trillions of iterative mathematical steps. Computation is, in effect, the currency of machine intelligence.

In the early years of AI, progress was constrained by hardware. From the 1950s through the 1990s, models like the Perceptron or Elman networks operated on limited computing power. The modern era, however, has flipped the equation: hardware and cloud infrastructure now scale faster than earlier forecasts assumed possible. FLOPs, once an esoteric benchmark used by supercomputing researchers, have become the global measure of AI power.

Epoch AI’s dataset visualises this clearly. Each AI system is represented as a circle plotted by publication date and training computation in petaFLOPs. The curve rises almost vertically after 2017 — marking the onset of the Transformer era.

Historical Context: From Perceptrons to Planet-Scale Models

The story of AI’s computational growth is also the story of technological compounding.

Early neural models could be trained in minutes on single-core CPUs, performing mere thousands of FLOPs. Hardware bottlenecks, limited memory, and small datasets capped progress. Symbolic AI dominated academic research — cheap in computation, rich in abstraction, but poor in performance.

The pivotal year was 2012, when AlexNet harnessed GPUs to win the ImageNet competition, using on the order of 10¹⁷ FLOPs of training compute (Epoch AI lists roughly 4.7×10¹⁷). This milestone inaugurated the deep-learning age. In 2017, the Transformer architecture, introduced in the paper “Attention Is All You Need,” opened the door to language models capable of human-like reasoning, but these models also demanded orders of magnitude more compute.

By 2020, OpenAI’s GPT-3 reached 3.14×10²³ FLOPs, eclipsing prior records. GPT-4 and more recent frontier models are estimated to require 10²⁵–10²⁶ FLOPs, roughly 30–300× the compute of GPT-3. The doubling time of training compute, roughly 24 months when it tracked Moore’s Law, has collapsed to roughly 5–6 months. This exponential trajectory redefines what scalability means in AI: not just bigger data, but bigger energy, capital, and ambition.
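The link between a doubling time and cumulative growth can be checked with simple arithmetic. The following is a minimal sketch, assuming a constant doubling time across the whole 2012 to 2025 window (a simplification, since the real trend is not perfectly uniform); the function name is illustrative and not taken from any cited source.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplicative growth after `months` at a fixed doubling time."""
    return 2.0 ** (months / doubling_months)


# Window discussed in this report: 2012 to 2025.
SPAN_MONTHS = (2025 - 2012) * 12  # 156 months

# Assumed constant doubling times, in months.
for label, doubling in [("24-month doubling", 24.0),
                        ("6-month doubling", 6.0),
                        ("5-month doubling", 5.0)]:
    factor = growth_factor(SPAN_MONTHS, doubling)
    print(f"{label}: ~{factor:.2e}x growth over {SPAN_MONTHS} months")
```

Under these assumptions, a 24-month doubling time yields only about a 90-fold increase over the window, whereas doubling times between 5 and 6 months yield factors from tens of millions to a few billion, a range that brackets the 300-million-fold figure cited at the start of this report.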

Understanding the Metric: FLOPs and PetaFLOPs

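A FLOP (floating-point operation) is a single arithmetic calculation, such as one addition or multiplication of two real-valued numbers. In this report, FLOPs denotes a total count of operations, not a rate per second. Modern AI training counts FLOPs in the quadrillions and beyond, expressed as petaFLOPs (10¹⁵ FLOPs) or even exaFLOPs (10¹⁸ FLOPs) for frontier systems.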
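To make the units concrete, the short sketch below converts a raw operation count into petaFLOPs and exaFLOPs. The helper names are illustrative; the only figure used is the GPT-3 estimate quoted earlier in this report.

```python
PETA = 1e15  # one petaFLOP = 10^15 floating-point operations
EXA = 1e18   # one exaFLOP  = 10^18 floating-point operations


def to_petaflop(flop: float) -> float:
    """Convert a raw operation count to petaFLOPs."""
    return flop / PETA


def to_exaflop(flop: float) -> float:
    """Convert a raw operation count to exaFLOPs."""
    return flop / EXA


gpt3_flop = 3.14e23  # GPT-3 training compute as quoted in this report
print(f"GPT-3: {to_petaflop(gpt3_flop):.3g} petaFLOP")
print(f"GPT-3: {to_exaflop(gpt3_flop):.3g} exaFLOP")
```

Expressed this way, a single frontier training run already amounts to hundreds of millions of petaFLOPs, which is why charts of training compute are usually drawn on a logarithmic axis.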

Training computation scales with three core variables: model parameters, dataset size, and the number of training epochs. Hardware efficiency determines how quickly and cheaply those operations can be executed, not how many are required. Despite hardware gains, the exponential growth in parameters and data has far outpaced efficiency improvements, resulting in near-vertical curves in Epoch’s dataset.
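The dependence on parameters and dataset size can be made concrete with a widely used rule of thumb for dense Transformer models: training compute C ≈ 6 · N · D, where N is the parameter count and D is the number of training tokens processed. This approximation is not drawn from the sources cited in this report, and the GPT-3 inputs below (175 billion parameters, about 300 billion tokens) are publicly reported figures used here as assumptions.

```python
def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute for a dense Transformer.

    Assumes roughly 6 floating-point operations per parameter per
    training token (forward plus backward pass). This is an
    approximation, not an exact accounting.
    """
    return 6.0 * n_params * n_tokens


# Assumed inputs: publicly reported GPT-3 figures.
GPT3_PARAMS = 175e9   # parameters
GPT3_TOKENS = 300e9   # training tokens processed

estimate = estimate_training_flop(GPT3_PARAMS, GPT3_TOKENS)
print(f"Estimated GPT-3 training compute: {estimate:.3g} FLOPs")
```

With these inputs the estimate lands within about one percent of the 3.14×10²³ FLOPs quoted earlier for GPT-3, which suggests the rule of thumb is a reasonable first-order check on reported figures.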

Quantitative Perspective: The Shape of Exponential Growth

Key Drivers of the Compute Explosion

The economics of scale in artificial intelligence have created a striking imbalance across the industry. Only a limited number of organisations now hold the resources, infrastructure, and financial capacity required for exascale AI training. These entities, supported by vast data centres, proprietary chip architectures, and immense energy budgets, dominate the frontier of large model development. Their position allows them to set the pace of progress and to define the benchmarks that the rest of the world follows.

Smaller firms and research groups, while often rich in creativity, operate within far tighter computational constraints. They advance through methods such as fine-tuning existing large models, building domain-specific optimisations, or collaborating within open-weight and open-source ecosystems. These approaches allow innovation to flourish at the edges of affordability, enabling participation in the AI landscape without access to colossal computing budgets. Yet the disparity remains clear.

This widening gap reveals a deeper structural inequality in the compute economy. The same visualisations produced by Epoch AI that track exponential growth in training computation also expose an equally rapid concentration of power. As compute demands soar, ownership consolidates around those able to sustain the costs. The result is a landscape where technological progress and economic inequality grow in tandem, reshaping not only who builds the next generation of AI systems but also who benefits from them.

Environmental Dimensions of Computation

Compute as a Geopolitical Lever

Computation has become the cornerstone of technological sovereignty in the modern world. The ability to produce, control, and deploy high-performance computing resources now determines not only economic competitiveness but also strategic independence. This reality was underscored by the 2023 United States Executive Order on Artificial Intelligence, which tied reporting requirements to explicit compute thresholds (for example, models trained with more than 10²⁶ operations), recognising that access to large-scale computational power is directly tied to national security interests.

As artificial intelligence grows central to defence, innovation, and global influence, the focus of geopolitics has shifted towards chips, data centres, and supply chains. Export controls on advanced semiconductors, restrictions on chip manufacturing technologies, and the formation of new AI alliances are now central tools of statecraft. Nations capable of designing and fabricating high-end chips hold leverage comparable to the energy superpowers of the previous century.

In this emerging order, floating-point operations, or FLOPs, have taken on symbolic and strategic weight. They represent concentrated capability, a measure of digital power that is both economically valuable and politically sensitive. Like nuclear energy in the twentieth century, computation today is potent, regulated, and geopolitically charged, defining the contours of influence and control in the age of artificial intelligence.

The Emerging Compute–Capital Nexus

The relationship between computation and capital has evolved into a powerful new economic axis that defines the modern AI landscape. Compute and capital are now deeply intertwined, each reinforcing the other as essential inputs to technological progress. The valuation of AI companies is no longer determined primarily by talent or intellectual property but increasingly by their access to GPU hours, data infrastructure, and overall computational efficiency. In this emerging paradigm, the ability to secure and optimise large-scale compute resources has become a decisive measure of competitive strength.

Investors have begun to treat compute as a financial asset in its own right. Efficiency ratios, training throughput, and cost per FLOP are now central to assessing an AI firm’s long-term sustainability and scalability. This shift reflects the growing recognition that computation is not merely an operational expense but a form of capital investment with compounding returns when applied effectively.
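A metric such as cost per FLOP can be sketched in a few lines. The example below is an illustration under stated assumptions, not a valuation method taken from any cited source: the rental price, peak throughput, utilisation, and run size are all hypothetical values chosen only to show the arithmetic.

```python
def cost_per_flop(price_per_hour_usd: float,
                  peak_flop_per_s: float,
                  utilisation: float) -> float:
    """USD per floating-point operation actually delivered."""
    if not 0.0 < utilisation <= 1.0:
        raise ValueError("utilisation must be in (0, 1]")
    # Operations delivered by one accelerator in one hour.
    flop_per_hour = peak_flop_per_s * utilisation * 3600.0
    return price_per_hour_usd / flop_per_hour


def training_cost_usd(total_flop: float, usd_per_flop: float) -> float:
    """Total cost of a training run at a given cost per FLOP."""
    return total_flop * usd_per_flop


# Hypothetical inputs, chosen only to illustrate the calculation.
PRICE_PER_HOUR_USD = 2.0   # assumed rental price per accelerator-hour
PEAK_FLOP_PER_S = 1e15     # assumed peak throughput per accelerator
UTILISATION = 0.4          # assumed fraction of peak actually achieved
TOTAL_FLOP = 1e24          # assumed size of the training run

rate = cost_per_flop(PRICE_PER_HOUR_USD, PEAK_FLOP_PER_S, UTILISATION)
print(f"cost per FLOP: {rate:.3e} USD")
print(f"training cost: {training_cost_usd(TOTAL_FLOP, rate):,.0f} USD")
```

The utilisation term is what makes efficiency an investment question: at a fixed hourly price, doubling the fraction of peak throughput actually achieved halves the cost of the same training run.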

At the same time, cloud service providers wield unprecedented pricing power, shaping the economics of AI development through control over access, availability, and cost structures. This concentration has created a new form of dependency in which smaller players must lease innovation capacity from a handful of global infrastructure giants. As compute access becomes stratified, the distribution of innovation follows suit, with resource-rich firms able to explore the frontiers of intelligence while others innovate within the constraints of what they can afford.

The result is an economic landscape where computation and capital merge into a single engine of technological growth, one that promises extraordinary potential yet deepens structural divides across the global AI ecosystem.

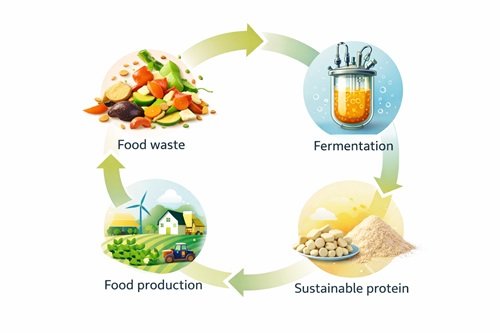

The Sustainability Equation: Compute, Carbon, and Conscience

Every increase in computational power translates into tangible energy extraction from the physical world. Each additional layer of model complexity and every surge in FLOPs represents not only technological progress but also a measurable environmental cost. As artificial intelligence systems continue to grow in scale and sophistication, the link between digital advancement and energy consumption has become impossible to ignore.

Governments and regulatory bodies are beginning to respond with policies that recognise computation as both a driver of innovation and a source of environmental strain. Mandatory carbon reporting for AI training projects is emerging as a standard requirement, compelling organisations to disclose the energy and emissions associated with model development. At the same time, new incentive structures are being introduced to encourage the design and adoption of energy-efficient chips and data centres. Parallel to this, sustainability indices are being developed to benchmark AI models by their compute intensity, enabling more transparent comparisons across technologies and organisations.
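The kind of disclosure described above can be approximated from training compute alone. The sketch below is a rough estimate under stated assumptions, not a reporting standard: the hardware efficiency, the power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy), and the grid carbon intensity are all hypothetical inputs.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def training_energy_kwh(total_flop: float,
                        flop_per_joule: float,
                        pue: float) -> float:
    """Facility-level energy for a training run, in kWh."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_energy_joules = total_flop / flop_per_joule
    return it_energy_joules * pue / JOULES_PER_KWH


def emissions_kg_co2(energy_kwh: float,
                     grid_kg_co2_per_kwh: float) -> float:
    """Emissions implied by the energy use and grid carbon intensity."""
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical inputs, chosen only to illustrate the calculation.
TOTAL_FLOP = 1e24            # assumed size of the training run
FLOP_PER_JOULE = 1e12        # assumed delivered hardware efficiency
PUE = 1.2                    # assumed data-centre overhead
GRID_KG_CO2_PER_KWH = 0.4    # assumed grid carbon intensity

energy = training_energy_kwh(TOTAL_FLOP, FLOP_PER_JOULE, PUE)
print(f"energy:    {energy:,.0f} kWh")
print(f"emissions: {emissions_kg_co2(energy, GRID_KG_CO2_PER_KWH):,.0f} kg CO2")
```

Separating hardware efficiency, facility overhead, and grid intensity into distinct inputs mirrors the policy levers mentioned above: each can be improved independently, and each changes the reported footprint of the same training run.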

Sustainability is no longer merely a matter of corporate ethics but a condition for long-term viability. The future of artificial intelligence will be defined not only by how powerful models become, but by how responsibly that power is harnessed. Balancing performance with environmental stewardship is becoming the defining test of innovation in the age of intelligent computation.

Looking Forward: Intelligent Scaling and the Post-Exponential Era

The Human Equation Behind the Numbers

The history of artificial intelligence computation is the story of human ambition expressed through mathematics. Every rise in floating-point operations represents a step closer to creating systems that can perceive, reason, and create in ways once thought impossible. Behind every algorithm and model lies the enduring desire to expand the boundaries of understanding and to capture fragments of human thought in digital form.

Yet each surge in computational power carries a cost. Progress demands vast amounts of energy, natural resources, and capital, linking the pursuit of intelligence to the physical limits of our planet. The arrival of the exascale era highlights both human brilliance and the growing need for restraint. It marks a turning point where society must decide whether to keep feeding endless consumption or to pursue a form of intelligence that grows responsibly.

True advancement will depend not on how large or fast machines become but on how thoughtfully they are developed. The future of artificial intelligence lies in sustainable cognition, where progress strengthens rather than depletes the world around it. Computation is not destiny; it is direction, and its path will be shaped by the wisdom of our choices.

References

- Epoch AI (2025) – with major processing by Our World in Data. “Training computation (petaFLOP)” dataset. Our World in Data, retrieved from https://ourworldindata.org/grapher/artificial-intelligence-training-computation.

- Executive Order on Artificial Intelligence, United States, October 2023.

- Amodei, D. & Hernandez, D. (2018). “AI and Compute.” OpenAI Research Note.

- Our World in Data (2025). “Computation used to train notable artificial intelligence systems, by domain.” Metadata documentation.