Amazon Web Services' notorious US-EAST-1 region endured yet another disruption, barely eight days after a catastrophic 15-hour outage crippled online services globally.

The fresh incident began at 10:36pm UTC (3:36pm Pacific Time) on 28 October, when the cloud computing giant acknowledged that some EC2 launches within its use1-az2 Availability Zone were experiencing elevated latencies. Amazon throttled certain EC2 resource requests, advising customers that retrying would likely resolve the issue.
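Amazon's advice to retry is the standard client-side response to throttling. The AWS SDKs already include configurable retry behaviour, so in practice you would tune that rather than write your own. The sketch below is only an illustration of the underlying idea: capped exponential backoff with full jitter. `ThrottledError`, `retry_with_backoff` and `flaky_launch` are hypothetical names. They stand in for whatever throttling error your SDK raises (for EC2 API throttling, the error code is `RequestLimitExceeded`) and whatever launch call you make.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for an SDK throttling error (e.g. EC2's RequestLimitExceeded)."""


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying on ThrottledError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling error to the caller
            # The backoff window doubles each attempt up to max_delay; sleeping a random
            # fraction of it spreads retries out so clients don't all retry in lockstep.
            window = min(max_delay, base_delay * 2**attempt)
            sleep(random.uniform(0, window))
    raise AssertionError("unreachable")


# Usage: a simulated launch that is throttled twice, then succeeds.
calls = {"n": 0}


def flaky_launch() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ThrottledError("request throttled")
    return "i-0123456789abcdef0"  # hypothetical instance ID


delays: list[float] = []
result = retry_with_backoff(flaky_launch, sleep=delays.append)
print(result, len(delays))  # i-0123456789abcdef0 2
```

Injecting `sleep` keeps the example fast and testable. The jitter matters during an incident like this one, when many clients are throttled at once and would otherwise hammer the API in synchronized waves.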

Cascading Failures Expose Systemic Vulnerabilities

The troubles extended beyond compute resources, causing task launch failures in Elastic Container Service (ECS), on both the EC2 and Fargate launch types, for a subset of US-EAST-1 customers. Amazon's status dashboard said a "small number" of ECS cells were experiencing elevated error rates when launching new tasks and that, in some cases, existing tasks stopped unexpectedly.

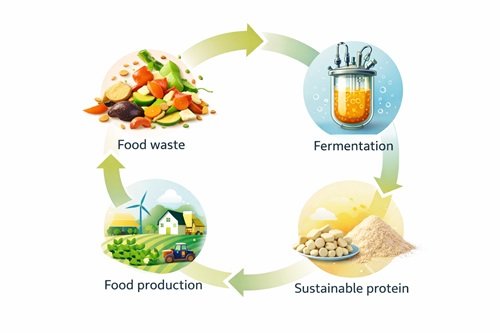

The incident's ripple effects showed how precarious the interdependencies within cloud infrastructure can be. EMR Serverless, which lets customers run big data frameworks such as Apache Spark and Hive without managing clusters, fell victim to the ECS disruption. The service maintains "warm pools" of ECS clusters to handle customer requests, and some of these clusters were running in the affected ECS cells.

Progress Delayed as Recovery Takes Hold

By 5:31pm Pacific Time, Amazon reported it was "actively working on refreshing these warm pools with healthy clusters" and had "made progress on recovering impacted ECS cells," though it warned that this progress was not yet visible externally. The company estimated that recovery would take approximately two to three hours.
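AWS has not said how EMR Serverless manages its warm pools internally. The sketch below is therefore only a generic illustration of what "refreshing a warm pool with healthy clusters" means: evict pre-provisioned clusters that sit in impaired cells and backfill the pool to its target size. `Cluster`, `refresh_warm_pool`, `provision_in_cell_a` and the cell names are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque


@dataclass
class Cluster:
    cluster_id: str
    cell: str  # the (hypothetical) ECS cell this pre-provisioned cluster runs in


def refresh_warm_pool(
    pool: Deque[Cluster],
    is_healthy: Callable[[Cluster], bool],
    provision: Callable[[], Cluster],
) -> int:
    """Evict unhealthy clusters from the pool in place and backfill it to its original size.

    Returns the number of clusters that were replaced.
    """
    target = len(pool)
    healthy = [c for c in pool if is_healthy(c)]
    pool.clear()
    pool.extend(healthy)
    replaced = 0
    while len(pool) < target:
        pool.append(provision())  # caller must provision into a healthy cell
        replaced += 1
    return replaced


# Usage: cell-b is impaired, so its two clusters are swapped for new ones in cell-a.
impaired_cells = {"cell-b"}
pool = deque([Cluster("c1", "cell-a"), Cluster("c2", "cell-b"), Cluster("c3", "cell-b")])
new_ids = iter(range(100, 200))


def provision_in_cell_a() -> Cluster:
    return Cluster(f"c{next(new_ids)}", "cell-a")


replaced = refresh_warm_pool(pool, lambda c: c.cell not in impaired_cells, provision_in_cell_a)
print(replaced, [c.cluster_id for c in pool])  # 2 ['c1', 'c100', 'c101']
```

The sketch also shows why such recovery is not instantaneous. Every replaced cluster has to be provisioned from scratch before the pool is back to full strength.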

Amazon eventually resolved the matter at 05:57 UTC on 29 October (10:57pm Pacific Time on 28 October), according to its final status update.

Déjà Vu: The Dependency Dilemma

This incident is an uncomfortable echo of the major outage on 20 October, when DNS resolution failures for DynamoDB service endpoints triggered a cascading failure across the region. That earlier disruption showed how heavily AWS's own services depend on one another, a vulnerability that remains unresolved.

In the latest incident, the EMR Serverless problems stemming from ECS issues once again highlighted how internal dependencies make Amazon's cloud brittle. Multiple services rely on the stable operation of foundational components, creating single points of failure that can topple entire service stacks.

Ten Services Impacted, Yet Reports Remain Scarce

AWS listed ten affected services, among them App Runner, Batch, CodeBuild, Fargate, Glue, EMR Serverless, EC2, ECS, and Elastic Kubernetes Service (EKS). However, reports of widespread service disruption were notably absent at the time.

This relative quiet may be because US-EAST-1 has six Availability Zones, which gave customers running multi-AZ architectures working alternatives. In addition, because the outage was only partial, AWS likely still had spare capacity within the impacted Availability Zone that customers could use in place of the throttled or failed resources.
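The multi-AZ fallback described above can be sketched on the client side as "try each Availability Zone in turn and keep the first launch that succeeds." This is a minimal illustration under stated assumptions. `launch_in_first_healthy_az`, `CapacityError` and `fake_launch` are hypothetical, and a real implementation would call your SDK's launch API and catch its specific error codes. The zone IDs follow the `use1-azN` naming used in Amazon's status update.

```python
from typing import Callable, Dict, Sequence, Tuple


class CapacityError(Exception):
    """Hypothetical stand-in for a throttled or failed launch in one Availability Zone."""


def launch_in_first_healthy_az(
    az_ids: Sequence[str],
    launch: Callable[[str], str],
) -> Tuple[str, str]:
    """Try each AZ in order; return (az_id, resource_id) for the first launch that succeeds."""
    errors: Dict[str, Exception] = {}
    for az in az_ids:
        try:
            return az, launch(az)
        except CapacityError as exc:
            errors[az] = exc  # record why this AZ failed, then fall through to the next one
    raise RuntimeError(f"launch failed in every AZ tried: {sorted(errors)}")


# Usage: simulate use1-az2 returning elevated launch errors, as in the incident.
def fake_launch(az: str) -> str:
    if az == "use1-az2":
        raise CapacityError("elevated launch errors")
    return f"task-in-{az}"


result = launch_in_first_healthy_az(["use1-az2", "use1-az4", "use1-az6"], fake_launch)
print(result)  # ('use1-az4', 'task-in-use1-az4')
```

The list order expresses preference. A workload pinned to a single zone has no such list to walk, which is why it feels a partial, single-AZ incident like this one in full.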

The incident is yet another reminder that concentration risk in cloud computing remains a pressing concern: the region dubbed "the crown jewel of Amazon's cloud infrastructure" continues to prove vulnerable even as it hosts countless production workloads.

AWS has not disclosed the root cause of the 28 October disruption.